
How friendly is our AI friend?

Leading figures in the field of artificial intelligence development, including Elon Musk and Apple co-founder Steve Wozniak, are proposing a pause in the development of AI technologies until reliable safety protocols are developed and implemented. As a technology-focused foundation, we believe that the most effective way to protect ourselves is to stay informed about what is happening.

The first thing we did after reading the open letter and some background information was to ask GPT 3.0 what it thinks about the threat to humanity from future versions of itself. Unfortunately, its answer adds nothing new to what futurists were saying 20 years ago, what education specialists are saying now, and what we all know: the threat of technology begins in the mind of the person who turns it into a tool for achieving their own goals, sometimes, unfortunately, even military ones.

Yes, AI can be used for disinformation. Yes, today’s AI tools can violate your privacy: read the disclaimers “Developers can read your texts for AI development purposes”. There is also a broader threat to global security, since AI can be used for cyberattacks. But what is currently being hyped in the media is the rise of inequality: if access to AI tools is limited to certain segments of society, this will further deepen the divide in access to technology, to reliable information, and, as a result, to benefits. Hence the entirely justified fear of job loss: automation and robotisation are already affecting the employment structure in industry (welders are being replaced by welding robot operators, for example).

The release of the AI language model GPT 3.0 has drawn our attention to the fact that white-collar work can be done by machines much faster and sometimes even better, including written and oral translation, analytics and forecasting, content creation, information search and analysis, and the optimization of many processes. Of course, it’s frightening to think about what will be left for us to do, especially if AI becomes a black box for us, where it’s unclear what goes in and under what conditions.

The most contradictory aspect of AI technology is that its creators, in one way or another, choose the information that is fed to the machine, build the structure of evaluation, self-analysis, and monitoring, and select the experts on whose remarks further infrastructure is built. The popular language learning app Duolingo already works with GPT 4.0 technology, and the government of Iceland has initiated a program to preserve the Icelandic language in cooperation with OpenAI based on GPT 4.0.

Returning to the open letter, it’s a perfect illustration of what happens to information as it moves from medium to medium. The letter calls for a halt to the development of technologies more advanced than GPT 4.0 until regulations are created for data selection, self-analysis, correction, and rules of AI usage. However, some specialists have already disputed the document, claiming they didn’t sign it, and some signature certificates are invalid. Signatures were collected with the argument that even Elon Musk had signed it, so why don’t you? Mr. Musk, as we know, was one of the co-founders of OpenAI, refused external regulation for the AI used in Tesla cars, and sponsors the Institute, known for its bleak scenarios of the future.

It’s evident that the most effective way to protect ourselves is to stay informed about what’s happening.

Let me quote Yuval Noah Harari (who appears to be among those who signed the open letter) on what he actually writes on his official channels: “The danger is that if we continue to invest too much in the development of AI and too little in the development of human consciousness, such a powerful technology can only serve to enhance human stupidity.”

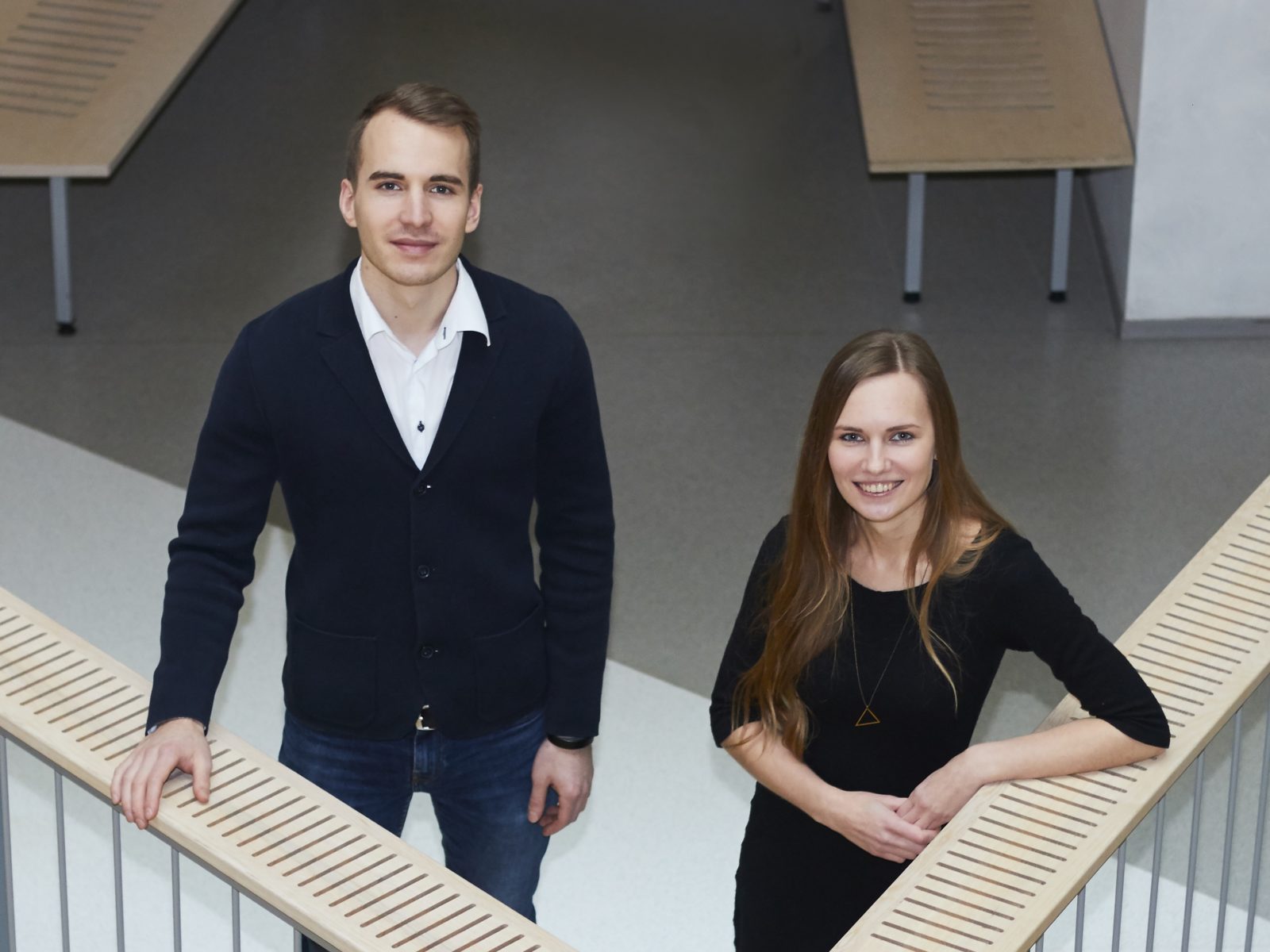

Success story: Gelatex joins Techstars startup accelerator

3 years of development

“We have developed our technology over the past 3 years. Several global luxury brands are interested in using our textiles. We feel that now is the time to accelerate our activities to bring the product to market faster,” comments Gelatex co-founder Mari-Ann Meigo Fonseca. “The US is at the forefront of bringing disruptive innovations to market. We believe that participating in the accelerator will help us to do the same.”

Initial investment of 120 000 USD

Gelatex co-founder and CTO Märt-Erik Martens adds that Gelatex will benefit tremendously from industry connections with The Heritage Group. “Besides gaining the tools to develop sales and attract investment, we hope the program will be able to dramatically accelerate our product development through partnerships via this global company,” says Martens. Finally, the accelerator program also offers Gelatex an initial investment of 120 000 USD to expand product development and operations.

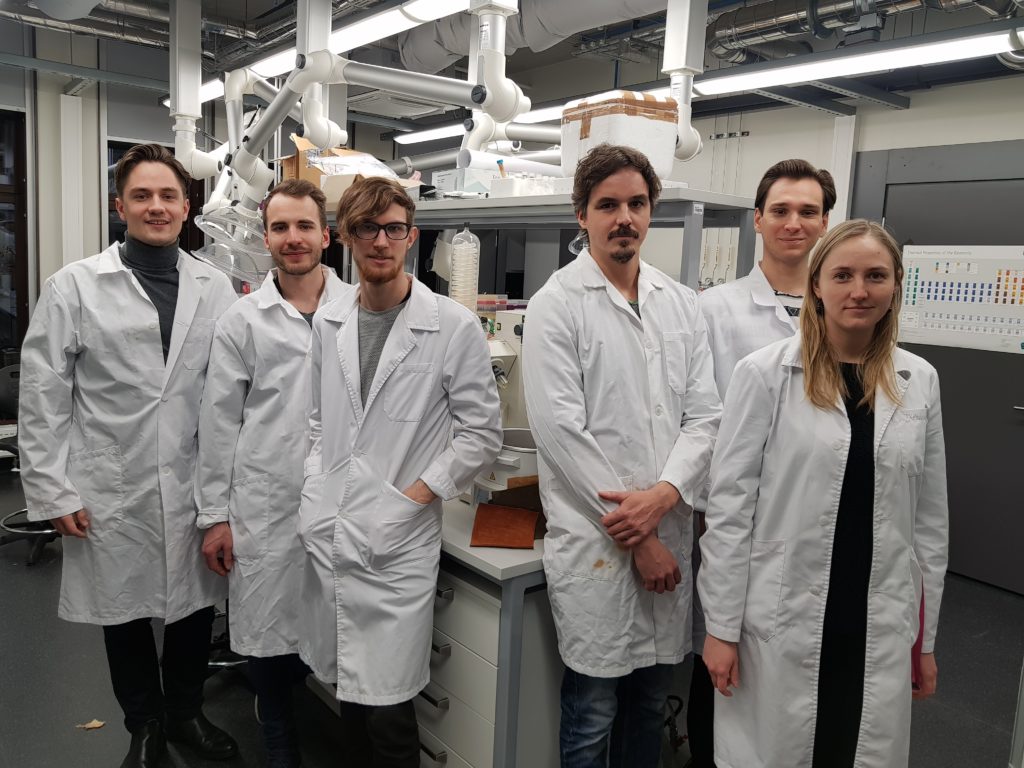

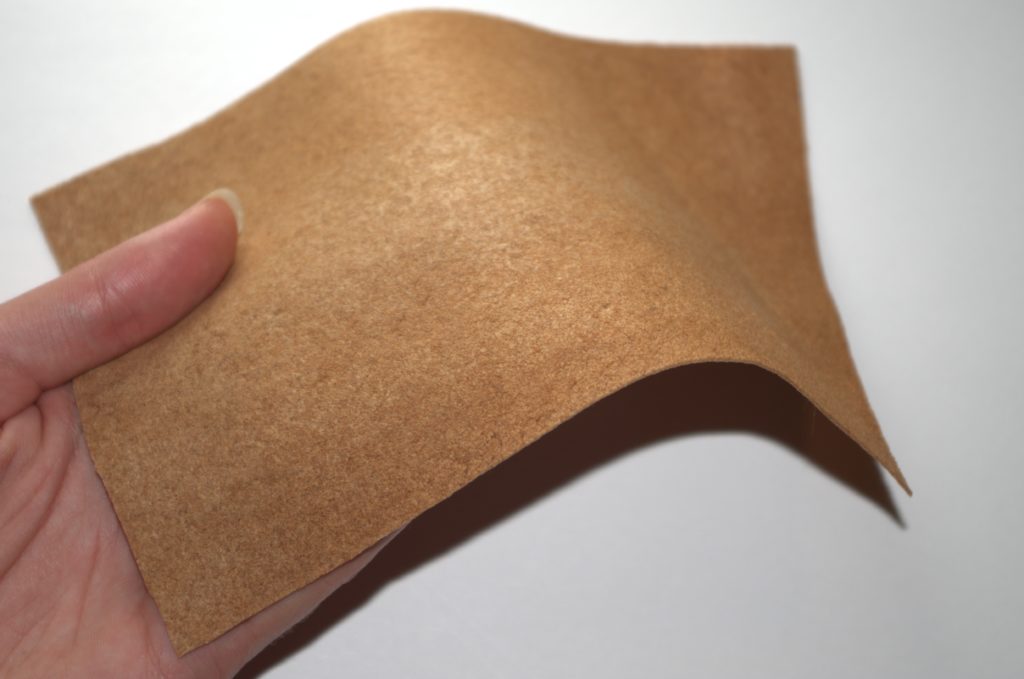

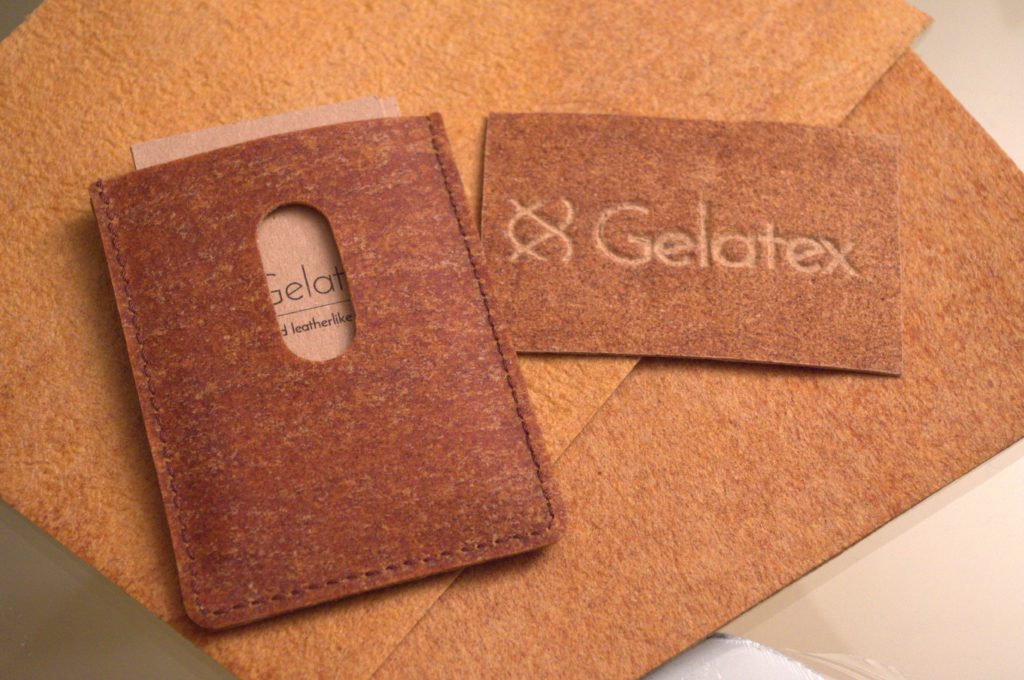

Eco-friendly materials for everyone

Gelatex is currently tackling the problem that 95% of all leather today is made with toxic chemicals, while eco-friendly alternatives are not scalable. It has developed the first non-toxic, eco-friendly textile that is chemically identical to leather and easy to produce at mass scale. The material is made from gelatin derived from waste from the leather and meat industries, adding value to an abundant waste material. Unlike leather, it comes in rolls, is made without toxic chemicals, and its production is fast and environmentally friendly.

Besides Prototron, Gelatex has been supported by the Estonian Ministry of the Environment, Climate-KIC, Enterprise Estonia, Archimedes, the Horizon 2020 SME Instrument, and others.
